While doing the programming challenges in Advent of Code, I came across an interesting behavior of LLMs in coding assistants and decided to write about it to clarify my thoughts.

First some background.

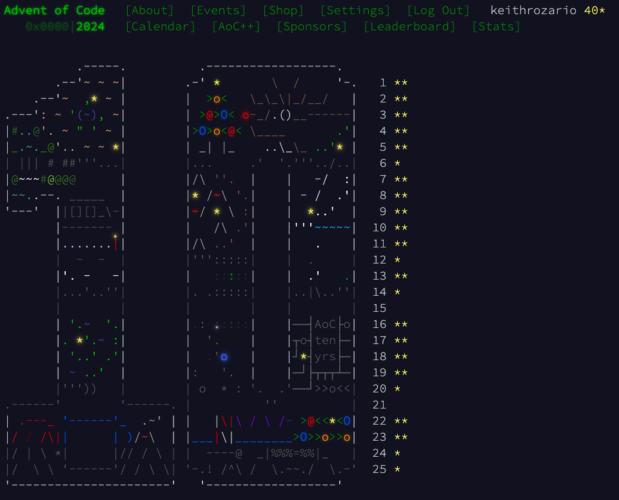

Advent of Code is a series of daily coding challenges released during the season of Advent (the period just before Christmas). Each challenge has two parts, and you must solve Part 1 before Part 2 is revealed. Part 2 is harder than Part 1 and usually requires rewriting your solution. Sometimes the rewrite is quite extensive; other times it is a small incremental step.

If you haven't done these challenges before, I encourage you to try. None of them are easy (at least to me), but all of them are solvable with enough elbow grease and time.

That said, the challenges are still contrived. Firstly, the questions are much better written than what you'd see in a Jira ticket or requirements document. They include a detailed description of what must be done, and sample inputs and outputs you can test against. Secondly, the challenges extend beyond what most coders do on a daily basis: one challenge required writing a small program to 'defrag' a disk, another required building a tiny assembler that ran its own program, and multiple questions involved navigating a 2D maze with obstacles along the way. All fun things you will probably not do as a programmer in the real world.

I took on the challenges both to improve my coding skills and to learn how I could use coding assistants in these close-to-real-world scenarios. The hope was that I would gain some insight into how to use these tools more effectively should I need to do something more than solve contrived programming challenges before Christmas.

OK. Background complete.

Let's move on to the challenge that changed the way I look at LLMs forever.