The Apple vs. FBI story has evolved so much in the past weeks that I thought I needed to write a separate post just on the updates. Admittedly, the story is far more complex and nuanced than I initially presumed, and everyone wants to be part of the conversation.

On one side, we have the Silicon Valley tech geeks, who seem to be unanimously in the corner of Tim Cook and Apple, while on the other we have the Washington, D.C. policymakers, who are equally supportive of James Comey and the FBI he directs.

But to understand this issue from a fair and balanced perspective, we need to frame the correct question: not just what the issue is about, but who it is really focused on.

This isn’t just about the FBI or Apple

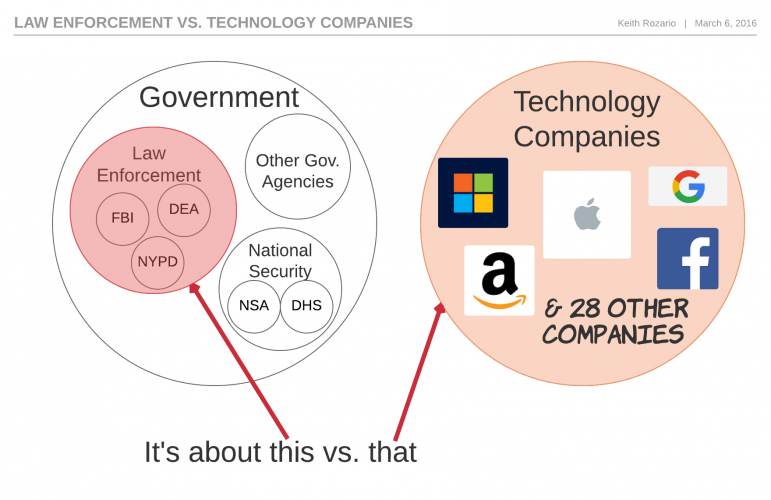

Framing this as the FBI vs. Apple or The Government vs. Apple is wrong. This is Law Enforcement vs. Tech Companies.

The FBI is just one part of The Government, specifically the part tasked with investigating federal crimes. James Comey, the FBI director, is genuinely trying to do his job when he uses the All Writs Act to compel Apple to create a version of iOS that would let the FBI brute-force the phone’s PIN code.
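To put ‘brute-force the PIN’ in numbers: the court order asked Apple to disable the auto-erase after ten failed attempts and the delays between guesses, and to let PINs be submitted electronically. That leaves only the cost of deriving the key from each guess, which Apple’s iOS security documentation says is calibrated to roughly 80 ms per attempt. Taking that figure as an assumption and ignoring every other overhead, a rough sketch of worst-case search times:

```python
# Rough worst-case brute-force times for a passcode, ASSUMING the ~80 ms
# per-guess key-derivation cost described in Apple's iOS security documentation
# and no other delays (auto-erase and escalating lockouts removed).
SECONDS_PER_GUESS = 0.08


def worst_case_seconds(alphabet_size: int, length: int,
                       seconds_per_guess: float = SECONDS_PER_GUESS) -> float:
    """Time to try every passcode of the given length over the given alphabet."""
    return (alphabet_size ** length) * seconds_per_guess


def human(seconds: float) -> str:
    """Format a duration in the largest sensible unit."""
    if seconds < 3600:
        return f"{seconds / 60:.1f} minutes"
    if seconds < 86400 * 365:
        return f"{seconds / 3600:.1f} hours"
    return f"{seconds / (86400 * 365.25):.1f} years"


cases = [
    ("4-digit PIN", 10, 4),
    ("6-digit PIN", 10, 6),
    ("6 characters, lowercase letters + digits", 36, 6),
]
for label, alphabet, length in cases:
    print(f"{label}: {human(worst_case_seconds(alphabet, length))}")
# 4-digit PIN: 13.3 minutes
# 6-digit PIN: 22.2 hours
# 6 characters, lowercase letters + digits: 5.5 years
```

On these assumptions a four-digit PIN falls in about a quarter of an hour, which is why the software limits the FBI wanted removed, rather than the PIN itself, do most of the protecting.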

But there are other parts of The Government, like the NSA, which have the wholly different task of national security. To them, if a smartphone is genuinely secure from the FBI, then it’s secure from Russian cybercriminals and Chinese state-sponsored actors too (probably!).

And because so much data is on smartphones, including the smartphones of federal government employees, America’s national security interest is better protected by phones that are completely unbreakable than by ones that provide exceptional access to law enforcement. ‘Exceptional’ here means that no one has access except law enforcement, and perhaps TSA agents, maybe border patrol and the coast guard; you can see how slippery a slope ‘exceptional’ can be. Oh, and by the way, exceptional access doesn’t exist in end-to-end encryption.
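To see why ‘exceptional’ is a fiction in end-to-end encryption, consider the simplest way to build exceptional access: encrypt each message’s session key twice, once for the recipient and once for a hypothetical escrow key held by law enforcement. The Python sketch below is a toy under that assumption; the XOR-with-SHA-256 ‘cipher’, the key names and the envelope format are all invented for illustration, are not how any real messenger or proposal works, and are not secure. What it shows is structural: the escrow key opens every envelope, for whoever holds it.

```python
import hashlib
import secrets


def toy_xor_cipher(key: bytes, data: bytes) -> bytes:
    """Toy stream cipher: XOR data with SHA-256(key || block offset).
    Encrypting and decrypting are the same operation. NOT secure: there is
    no nonce, so reusing a key across messages leaks information."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        block = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out.extend(b ^ k for b, k in zip(data[offset:offset + 32], block))
    return bytes(out)


RECIPIENT_KEY = secrets.token_bytes(32)  # held only by the recipient
ESCROW_KEY = secrets.token_bytes(32)     # hypothetical "exceptional access" key


def send(message: bytes) -> dict:
    """Encrypt with a fresh session key, then wrap that key for both parties."""
    session_key = secrets.token_bytes(32)
    return {
        "ciphertext": toy_xor_cipher(session_key, message),
        "for_recipient": toy_xor_cipher(RECIPIENT_KEY, session_key),
        "for_escrow": toy_xor_cipher(ESCROW_KEY, session_key),
    }


def open_envelope(key: bytes, slot: str, envelope: dict) -> bytes:
    """Unwrap the session key from one slot, then decrypt the message."""
    session_key = toy_xor_cipher(key, envelope[slot])
    return toy_xor_cipher(session_key, envelope["ciphertext"])


env = send(b"meet at noon")
print(open_envelope(RECIPIENT_KEY, "for_recipient", env))  # b'meet at noon'
print(open_envelope(ESCROW_KEY, "for_escrow", env))        # b'meet at noon'
```

Nothing in the code can tell a warrant-holding agent from a thief who copied `ESCROW_KEY`; the escrow key is simply one more key that decrypts everything.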

Former NSA director Michael Hayden has openly said “I disagree with Jim Comey. I actually think end-to-end encryption is good for America”. So it appears the NSA’s interest in national security competes with the FBI’s interest in investigating crimes.

The Government isn’t a single entity with just one interest; it is a collection of agencies with sometimes competing objectives, even though they all ultimately serve their citizens. Experts believe the NSA has the capability to crack the iPhone’s encryption easily but is refusing to indulge the FBI, because… well, it’s hard to guess why the NSA doesn’t like the FBI.

Susan Landau, a member of the Cybersecurity Hall of Fame (yes, it does exist), detailed two methods the FBI could use to hack the iPhone in her testimony to the House Judiciary Committee. Both methods involve complicated forensics tools, but would cost a few hundred thousand dollars (cheap!) and wouldn’t require Apple to write a weakened version of iOS. If the government can get into the phone for $100,000, that would mean it couldn’t compel Apple under the All Writs Act (AWA).

Remember, the FBI buys its spyware from the lowlifes at Hacking Team, which means it’s about as competent as the MACC and the Malaysian PMO, but if Comey and Co. can afford to spend $775,000 on shit from Hacking Team, I’m guessing $100,000 for a proper computer forensics expert isn’t a problem.

But maybe there’s an ulterior motive here. In the very recently concluded Brooklyn iPhone case, Magistrate Judge Orenstein noted that necessity is a prerequisite for any request made under the AWA: if the FBI has an alternative at a reasonable price, then Apple’s support is not necessary, and hence outside the ambit of the AWA. So maybe the NSA is withholding its help precisely so that Apple’s assistance stays ‘necessary’ under the AWA.

And this isn’t singularly about the FBI either. The Manhattan District Attorney is waiting for this case to set precedent before he seeks access to the 175 iPhones he’s hoping to unlock for cases that aren’t related to terrorism or ISIS. You can bet he’s not the only prosecutor waiting for the outcome, and it’s highly unlikely that the judge will make her ruling so specific that nobody except the FBI could use it as precedent.

But it’s also not just about Apple. The legal precedent set by this case would apply not just to every other iPhone, but possibly every other smartphone, laptop, car or anything else we could squeeze into the definition of a computer. This is about more than Apple, and that’s why the tech companies are lining up in support of Mr. Cook, 32 such companies the last I checked.

But now that we’ve framed the ‘who’, let’s frame the ‘what’.

The ‘one’ phone

Apple’s legal response to the court order starts with the sentence “This is not a case about one isolated iPhone”, and they’re right. The most probable outcome is that the legal precedent set would apply to all phones, laptops, wearables and just about everything considered ‘technology’.

The FBI was smart to pick this case, as it had the best possible chance of setting precedent. It involves terrorism, the phone belonged to the Government, and it was an older iPhone 5C that could still be brute-forced. Apple, it seems, was a cooperative adversary when it chose to fight instead of complying, allowing the FBI to ‘choose the battlefield’.

After all, the civil rights movement of the ’50s and ’60s also started with a chosen test case. Rosa Parks wasn’t the first woman to be charged with violating segregation laws, but the NAACP hand-picked her case as the one to pursue, because it had the right elements and told the most compelling story. The FBI couldn’t have picked a better set of facts for this case, except perhaps the one with the dead pregnant woman, but the fact remains that choosing the right case is important, because it allows you to control the narrative. This is just smart play on the FBI’s part.

The reality is that this case is about everything else except this one phone.

Syed Farook had other phones that he severely damaged before the shooting; authorities suspect the damage was done intentionally to hinder investigations. This phone had an iCloud backup dated just 3 weeks prior, so extracting its contents (if allowed by law) would recover only a very small window of data. Finally, he was a self-radicalized shooter who had no direct communications with ISIS, and could at most be considered an ISIS fanboy rather than a real member of the terrorist organization.

It is extremely unlikely that his phone holds anything of real value, and James Comey is playing on people’s emotions when he says “we could not look the survivors in the eye if we did not follow this lead”. In fact, there should be a vast amount of information available to the FBI about all the terrorists’ phones (not just this one) in the form of metadata, and if you think metadata is insufficient, please remember that the NSA has admitted to “killing people based on metadata”.

But there is a flip side: because there isn’t a huge urgency to investigate this phone, the case can work its way through a long and arduous legal battle, which means no one will be forced to make hasty decisions. You don’t want hard legal cases to involve matters of high urgency, so once again, good job FBI.

However, not everyone sees it that way. If the cybersecurity community is divided on anything, it’s Apple’s choice to defend this particular case. Adi Shamir, the guy who puts the ‘S’ in RSA, has come out to say “comply this time and wait for a better test case to fight where the case is not so clearly in favor of the FBI”. He’s not siding with the FBI; he’s advocating better tactics to achieve the strategic objective.

Before we go further, let me first vindicate my personal hero, Bill Gates. He was initially blindsided in an interview that the media headlined “Bill Gates supports the FBI”; he later clarified that he thought a balance should be struck and that he wasn’t siding with the FBI.

So if it isn’t about this one phone, what is it about?

Susan Hennessey, a former NSA lawyer and Lawfare blog editor, argues that this is about whether the limits of investigations should be set by technology or by the law. I think that framing is too narrow. If the technology exists, criminals will use it regardless of the law, and ‘the law’ is itself a kind of technology; it’s just that people writing laws have a completely different outlook from the people writing code.

Others suggest this is purely about whether the government can compel a company to write code that reduces the security of its products, and how far that compulsion can go. That is an equally narrow framing, limited to the applicability of the AWA.

I think the actual answer is that this is about both issues at once, and the reason it’s complicated is that the trade-off is neither easy nor immediately clear. To get better clarity, let’s first consider what exactly Apple needs to do to comply with the court order, and whether that is an unreasonable burden.

Unreasonable Burden

The term ‘unreasonable burden’ comes up a lot, simply because it is the best legal defense for refusing a court order made under the All Writs Act.

Apple estimates it would take 6-10 engineers four weeks to write a weakened iOS version, which doesn’t seem unreasonable for a company with $200 billion in the bank.

But writing the code is just step one. The code would have to be validated, tested, and finally documented (all of us who’ve worked on IT projects know how big a burden documentation is). Then it would have to go through Apple’s iOS quality assurance, because, as everyone knows, even small changes to IT systems can have ‘ancillary’ effects on other parts. Most IT folks supporting complex systems use the phrase “if it ain’t broke, don’t fix it” to describe their aversion to even minor changes in highly complex systems.

Once the iOS version is ready and deployed on the ‘one phone’, Apple has to decide what to do with it. It can either destroy the iOS version and restart the entire process for each and every law enforcement request, or secure “the software equivalent of cancer” at its facilities, trying its level best to ensure this cancer never ends up in the wrong hands. Both options are far more burdensome than 4 weeks of engineer time; in fact, one of them isn’t even an option anymore.

Just last week, the FBI was forced to reveal all the details of a hack it executed on Playpen, a large pedophile hidden service. The FBI didn’t just hack Playpen; it transferred the site to its own servers, and then began infecting visitors to the site with specialized software to determine their IP addresses.

Now, hosting child porn on federal government servers isn’t something anyone is comfortable with, but the good news is that this resulted in 137 people being charged with possession of child pornography.

The bad news is that for the defendants to get a fair trial, they deserve access to details of the hack, including the code and servers. The judge’s reasoning was simple: “it comes down to a simple thing, You say you caught me by the use of computer hacking, so how do you do it? … A fair question…the government should respond and say here’s how we did it”.

If a terrorist were ever charged on evidence pulled from this or a future iPhone using a weakened iOS that allows brute-force attacks, wouldn’t their defense counsel be entitled to examine that ‘hack’ and demand that all its intricacies be revealed?

The questions thrown up by computer hacking in the context of civil liberties are both fascinating and disturbing: on the one hand, protecting your civil liberties means granting them to child molesters too… and if we’re going to talk about technology and civil liberties, let’s go all the way to the First Amendment, the one about freedom of speech.

First Amendment: Code as Free Speech

Apple is pushing the argument that writing code is like writing anything else, and subject to the same protections. In a country whose First Amendment stipulates that no law shall be made abridging the freedom of speech, that’s an argument that goes straight to the core of American values.

The argument sounded whimsical to me, and lawyers immediately pointed out that you don’t have the freedom to create ransomware and botnets, and that the government can compel car companies to install seat belts and airbags without violating their constitutional protections.

My discomfort comes from the fact that the entire argument relies on the very recent ‘discovery’ in America that corporations are people, entitled to the same freedom of speech rights, which is wholly foreign to me, and quite frankly to 99% of America as well. The only reason it stands is that five of the nine Supreme Court justices decided it was so.

But the EFF raised a valid point (God bless their digital souls): Apple isn’t just being compelled to write code here, it’s being forced to digitally sign the code with its super-secret key. That key is the reason the FBI can’t write the code itself, and it is what keeps unauthorized software from running on iOS devices. The digital signature is an assertion from Apple to the iPhone that the code can be trusted and that it came from a trusted source (namely Apple Inc.). This is the equivalent of forcing Tim Cook to sign a document claiming that, when no one is looking, he actually uses a Windows Phone for daily work.
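A rough illustration of why the signing key, not the code, is the real prize: the device holds only the public half of a key pair and refuses to boot anything whose signature doesn’t check out against it. The Python sketch below uses textbook RSA with toy parameters (two Mersenne primes, no padding), which is nothing like Apple’s actual signing scheme and is not secure; the function names are hypothetical, and `pow(E, -1, m)` needs Python 3.8 or later.

```python
import hashlib

# Toy textbook RSA: two Mersenne primes, no padding. Illustration only;
# this is not Apple's signing scheme and is not secure.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q                          # public modulus
E = 65537                          # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent: the "super-secret key"


def digest(image: bytes) -> int:
    """Hash the software image and reduce it into the RSA range."""
    return int.from_bytes(hashlib.sha256(image).digest(), "big") % N


def sign(image: bytes) -> int:
    """Vendor side: only the holder of D can produce this value."""
    return pow(digest(image), D, N)


def device_will_boot(image: bytes, signature: int) -> bool:
    """Device side: knows only the public key (N, E) and checks the signature."""
    return pow(signature, E, N) == digest(image)


official = b"iOS build: passcode limits enforced"
sig = sign(official)
print(device_will_boot(official, sig))                               # True
print(device_will_boot(b"iOS build: passcode limits removed", sig))  # False
```

Anyone can write the ‘limits removed’ build; without `D` they cannot produce a signature the phone will accept, which is why the order needs Apple itself.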

No U.S. court has ever publicly compelled a private party to cryptographically sign code, and that’s really interesting. What’s even more interesting is the Fifth Amendment argument.

Fifth Amendment: The right against self-incrimination

“You have the right to remain silent”: all of us have heard that phrase on TV. But have you ever wondered what the right truly means?

It means that the accused doesn’t have to answer any questions from police officers, including questions about the passcode to their iPhone, or testify against themselves. In the US, that right is afforded by the Fifth Amendment.

Another way to look at this is that police officers can search a house, a car, the clothes on someone, even force them to take an X-ray, but they cannot search the contents of your brain. That’s a place law enforcement cannot go.

One of the questions this case raises is whether that place should be extended to the smartphone. On the one hand, it seems odd that a right to free thought should extend to a smartphone, but we’ve offloaded SO much cognitive work to our smartphones (some of us can’t navigate without Waze) that the idea of a smartphone as an extension of our brain seems natural and accurate.

Steve Jobs famously described the computer as “a bicycle for our minds”, and that analogy has become even more true with smartphones.

However, under American law there is no place outside the reach of law enforcement with a warrant. The brain (and only the human brain) seems to be the exception, but that’s because we never had the technology to build a container for thoughts that no one (not even the government) could forcibly open. Courts have already ruled that passcodes are protected by the Fifth Amendment, but thumbprints are not.

It will be interesting to see how these First and Fifth Amendment arguments end up, but for now let’s return to the very crux of the matter: the security and backdoor discussion.

Backdoors, going dark and other buzzwords

So here’s where I think a lot of it falls apart. Apple’s claim that this is a backdoor isn’t supported by most of the industry. This is more a case of weakening the front door, because the way Apple signs iOS packages means a package can be tailor-made for just one phone.
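Here is a sketch of how a signed build can be ‘tailor-made for just one phone’. Apple’s security documentation describes personalizing signed installs to a device-unique chip ID (the ECID); the sketch assumes a simplified version of that idea, in which the signature covers the image together with a device identifier. It uses textbook RSA with toy, unpadded parameters purely for illustration, and the device IDs and function names are made up.

```python
import hashlib

# Toy, unpadded textbook RSA with Mersenne-prime factors: illustration only.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # vendor's private signing exponent


def personalized_digest(image: bytes, device_id: str) -> int:
    """Hash the build together with one device's ID (simplified personalization)."""
    h = hashlib.sha256(image + b"|" + device_id.encode())
    return int.from_bytes(h.digest(), "big") % N


def sign_for_device(image: bytes, device_id: str) -> int:
    """Vendor side: sign this build for exactly one device."""
    return pow(personalized_digest(image, device_id), D, N)


def device_accepts(image: bytes, signature: int, my_device_id: str) -> bool:
    """Device side: verify against its own ID, so signatures for other devices fail."""
    return pow(signature, E, N) == personalized_digest(image, my_device_id)


build = b"weakened build: no auto-erase, no delays"
ticket = sign_for_device(build, "DEVICE-A")       # hypothetical device ID
print(device_accepts(build, ticket, "DEVICE-A"))  # True
print(device_accepts(build, ticket, "DEVICE-B"))  # False
```

The binding only narrows where a signed build will run; it does nothing about the build existing in the first place, which is what the dissenters worry about.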

Of course, the dissenters would argue that even with all the security Apple has in place, pushing out a signed, weakened iOS version only provides more attack surface for future exploits, and that is true. More complicated code is less secure than simple code, and the most complicated code of all is having two versions of the same thing. The most technically competent amicus brief in this case, written by the likes of Bruce Schneier, Charlie Miller and Jonathan Zdziarski, goes into this topic in detail.

In the ‘cryptographers’ brief, they point out that every prior version of Apple’s iOS has been jailbroken. In other words, all but the very latest version of iPhone operating systems have been hacked, so while Apple may rightfully enjoy a great reputation for building secure phones, even they have a pretty bad track record. Building secure code is very hard indeed, and it gets exponentially harder when you’re forced to build exceptional access into that code.

Apple, though, is engaging in theatrics when it uses the term ‘backdoor’. The entire industry agrees backdoors are bad, but no one has fully defined what a backdoor is (although Jonathan Zdziarski has tried).

But this is a PR battle, and the FBI isn’t innocent either. Besides specifically choosing this case for emotional and legal effect, it also uses terms to help its cause: terms that sound right, but inaccurately describe reality.

Terms like ‘going dark’.

The idea is that as more data is encrypted in transit (via end-to-end encryption) and at rest (on iPhones), law enforcement’s ability to run proper surveillance on criminals is diminishing, and soon it will be completely in the dark, without any ability to tell what criminals are doing.

Except that the opposite is true. We’re entering a ‘golden age of surveillance’, and all this ‘going dark’ hogwash is marketing speak for more federal dollars. The Berklett Cybersecurity Project released a report, aptly titled “Don’t Panic”, which argues that “going dark” is at the very least a gross exaggeration, if not completely untrue.

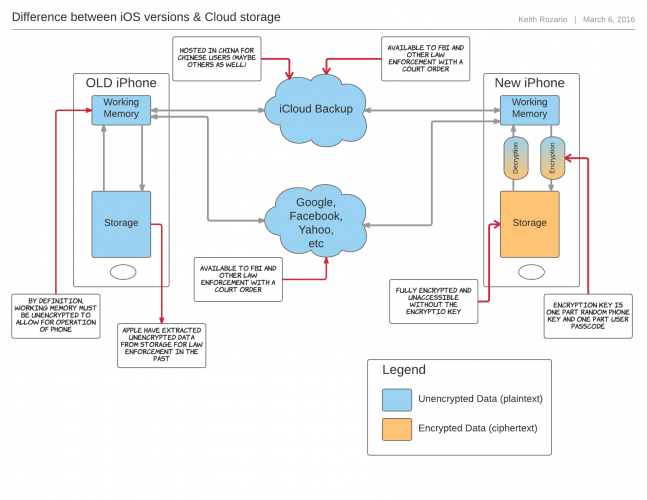

First, we have WAY more data on citizens today than we had 10 years ago, and while some of that data may be encrypted in walled gardens, most of it isn’t. There’s also a huge boom in metadata and Internet of Things devices, and data held by companies like Google and Facebook isn’t encrypted, simply because they need it to sell to advertisers. This data is available to law enforcement via a court order.

It’s also the reason Apple so easily provided the iCloud data to the FBI, before San Bernardino County screwed things up. And beyond what companies hold, with license plate scanners and CCTV cameras deployed nearly everywhere, there is far more surveillance available to law enforcement today than even 5 years ago.

The Berklett report concludes by saying:

In this report, we question whether the “going dark” metaphor accurately describes the state of affairs. Are we really headed to a future in which our ability to effectively surveil criminals and bad actors is impossible? We think not. The question we explore is the significance of this lack of access to communications for legitimate government interests. We argue that communications in the future will neither be eclipsed into darkness nor illuminated without shadow.

‘Going dark’ is to the FBI what ‘backdoor’ is to Apple. The PR speak further muddies the water in this already complex case, and I would prefer not to have it. It’s also worth noting that Cyrus Vance, the Manhattan District Attorney with 175 iPhones just waiting to be unlocked, has vehemently denounced the study’s conclusions; no prizes for guessing which side of the argument he’s on.

PR battles are important, because part of the solution is going to come from laws originating in Congress, and that’s the branch of government most susceptible to public opinion. So who’s winning the PR battle anyway?

Well, a Pew Research poll suggests that only 38% of Americans side with Apple, but Krishnadev Calamur of The Atlantic quickly pointed out that a Reuters poll, which structured its questions to offer more information, concluded that more Americans side with Apple. It’s unsurprising that for an issue this complex and technical, how much information the general public has can sway its opinion immensely.

So PR is going to be a feature of this discussion whether we like it or not, and the decisions made by the American people are going to affect not just Americans, but everyone else as well.

And speaking of everyone else, anyone know what China is going to do?

What foreign governments might do

It’s accepted that whatever Apple does for the American government, it will have to do for the Chinese, or the Saudis, or the Malaysians. Apple cites the arrest of a Facebook employee in Brazil, after the company refused to give the government access to WhatsApp communications, as an example of what might happen if it complies in the US and then refuses other governments.

Obviously iPhones aren’t cheap, but for a lot of people in a lot of despotic countries, getting security and privacy from their governments through an iPhone purchase is a very small price to pay indeed.

Critics like Stewart Baker (former general counsel of the NSA) are quick to point out that Apple is already complying with the demands of the Chinese government, first by moving iCloud infrastructure inside mainland China, and second by deploying a chip with custom-made technology (called WAPI) whose inner workings and security features are known only to the Chinese.

Now, the WAPI bit looks terrible for Apple, and we have to slap them on the wrist for that one, but that was the iPhone 4 and we’ve come a long way since then. The point about iCloud data in China is true, but it is also a red herring.

First, iCloud backups aren’t encrypted with a key that only the user holds, so the Chinese government could (very possibly) have easy access to all its citizens’ iCloud backups. But that is equally true in the US, and possibly in every other country Apple operates in.

Apple handed over the iCloud backups the moment a court order was given, and the only reason they are insufficient is that the FBI had San Bernardino County reset the iCloud password, which prevented the phone from making a fresh automatic backup. Had they not done that, none of this would be necessary.

So remember, future drug lords: switch off your iCloud backups before you start your empire. Oh, and avoid interviews with Sean Penn as well.

As a last note, this is also why Apple could comply with the previous 70 requests: it wasn’t bypassing encryption, it merely extracted plain-text data either from the cloud or from the iPhone’s memory. From iOS 8 onward (this phone runs iOS 9), the phone’s data is encrypted with a key tied to the passcode, which changes the make-up of the request.

Google, Facebook and Twitter, all of which hold unencrypted data on their users, also regularly comply with court orders for user data. Even Apple can’t fully encrypt its iCloud data, simply because if everything were truly encrypted with a key only the user knows, losing that key (or the password it comes from) would permanently render all backups useless. For 99% of Apple customers, losing all their data because they lost their password is simply unacceptable, so the FBI can breathe a sigh of relief.
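The trade-off in that last point is easy to see in code. If a backup key is derived only from the user’s password, a forgotten password leaves nothing to rebuild the key from; if the provider keeps its own copy so it can help after a reset, that copy is also what a court order can reach. The Python sketch below uses the standard library’s PBKDF2 (`hashlib.pbkdf2_hmac`); the iteration count, the salt handling and the escrowed copy are illustrative assumptions, not Apple’s actual iCloud design.

```python
import hashlib
import secrets

ITERATIONS = 200_000  # illustrative work factor, not Apple's


def derive_backup_key(password: str, salt: bytes) -> bytes:
    """Derive a 32-byte key from the password; only the salt needs storing."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS)


salt = secrets.token_bytes(16)
original_key = derive_backup_key("correct horse", salt)

# Design 1: user-held key only. After a password reset the new key is
# unrelated to the old one, so backups sealed with the old key stay sealed.
reset_key = derive_backup_key("new password", salt)
print(reset_key == original_key)  # False

# Design 2: the provider also keeps a copy of the key (hypothetical escrow).
provider_escrow = {"user@example.com": original_key}
recovered = provider_escrow["user@example.com"]
print(recovered == original_key)  # True: recoverable, and also producible on demand
```

Design 1 is what ‘truly encrypted’ means; Design 2 is what makes both password recovery and compliance with court orders possible.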

Conclusion

I have no one-liner conclusion to this story; it simply refuses to be simplified. It is truly a complicated issue of technology and policy that requires a sound understanding of both. But here are two final points to complete this 3000-word post.

The first is that the cybersecurity folks, specifically the cryptographers, seem to be approaching this problem with an absolutist mindset, and that can look unhelpful to the discussion. But cryptography is applied mathematics, and maths IS absolute. Cryptographers have maintained that exceptional access to end-to-end encryption can’t be secure. That is an absolute statement that is true, and proven to be true, and for policy makers to want to debate it is akin to asking for a discussion on making 2 + 2 = 5.

The second point is that this is a very, very complex problem with no immediate solution. Apple and various politicians have proposed a cybersecurity commission, but many point out that in Washington, commissions are where good ideas go to die.

Hopefully this conversation provides us some answers before anything goes to die.

Post-Script: Physical access isn’t enough

For a long time, we cybersecurity folks have had a maxim: if hackers have physical access to your computer, there’s nothing you can do to protect yourself. That’s why big corporations never let you into their datacenter, because if you had physical access to their servers, none of their protections would suffice.

That, however, is slowly changing, and Apple is leading the way. It is an engineering marvel that they can build a phone that is so hard for attackers to get into even with physical access, and now they seem to be working on a phone that would be 100% Apple-proof. This is hard to do, and generations ahead of what other industries (for example automotive and aviation) are capable of, but why Apple in particular?

Apple is unique: it sells hardware AND software. While others sell ads, Apple sells phones and laptops, which means that for Apple, you are the customer, while for Facebook and Google, you are the product they sell to their real customers, the advertisers.

Facebook can’t encrypt the data from itself, because its entire business model is premised on getting as much data as it can from you. Apple’s unique position means it is the tech company most likely to take this fight head on, and I have to salute them for it.